How to Use GPT and Other Large Language Models Wisely

Info

GPT and other large language models (LLMs) are powerful tools, but they are not magic. They must be learned and used with care, just like any other technology. They can speed up work, inspire ideas, and fill knowledge gaps — but they cannot replace your own understanding.

LLMs Are Not Human Thinkers

An LLM does not “think” like a person. It predicts the next piece of text (a token) from patterns learned across huge amounts of training data. When it seems to be reasoning, it is continuing the most likely sequence of words. This is why LLMs can produce convincing essays or explanations yet sometimes fail at basic arithmetic. To the model, “1 2 3 4” is not an ordered sequence in the mathematical sense; it is just a common pattern of tokens that appear together when people talk about counting. That is also why it sometimes gives the right result for a calculation: not because it solved it, but because the correct number often follows in its training data.
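The idea of "continuing the most likely sequence" can be made concrete with a toy model. The sketch below is only an illustration and not how GPT is built: it counts which word follows which in a tiny made-up corpus and then predicts the most frequent follower. The corpus and the function names `train_bigrams` and `predict_next` are assumptions introduced for this example.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus; a real LLM is trained on vastly more text.
corpus = "1 2 3 4 . 1 2 3 4 5 . 1 2 3"


def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    follows = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    if word not in follows:
        return None
    return follows[word].most_common(1)[0][0]


model = train_bigrams(corpus)
print(predict_next(model, "3"))  # prints 4: "4" followed "3" twice in the corpus
print(predict_next(model, "7"))  # prints None: "7" never appeared in the corpus
```

The toy model "counts" correctly after `3` only because that pattern appeared in its data; it has no notion of numbers, which is the same limitation described above on a much smaller scale.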

Patches, Bridges, and Roads

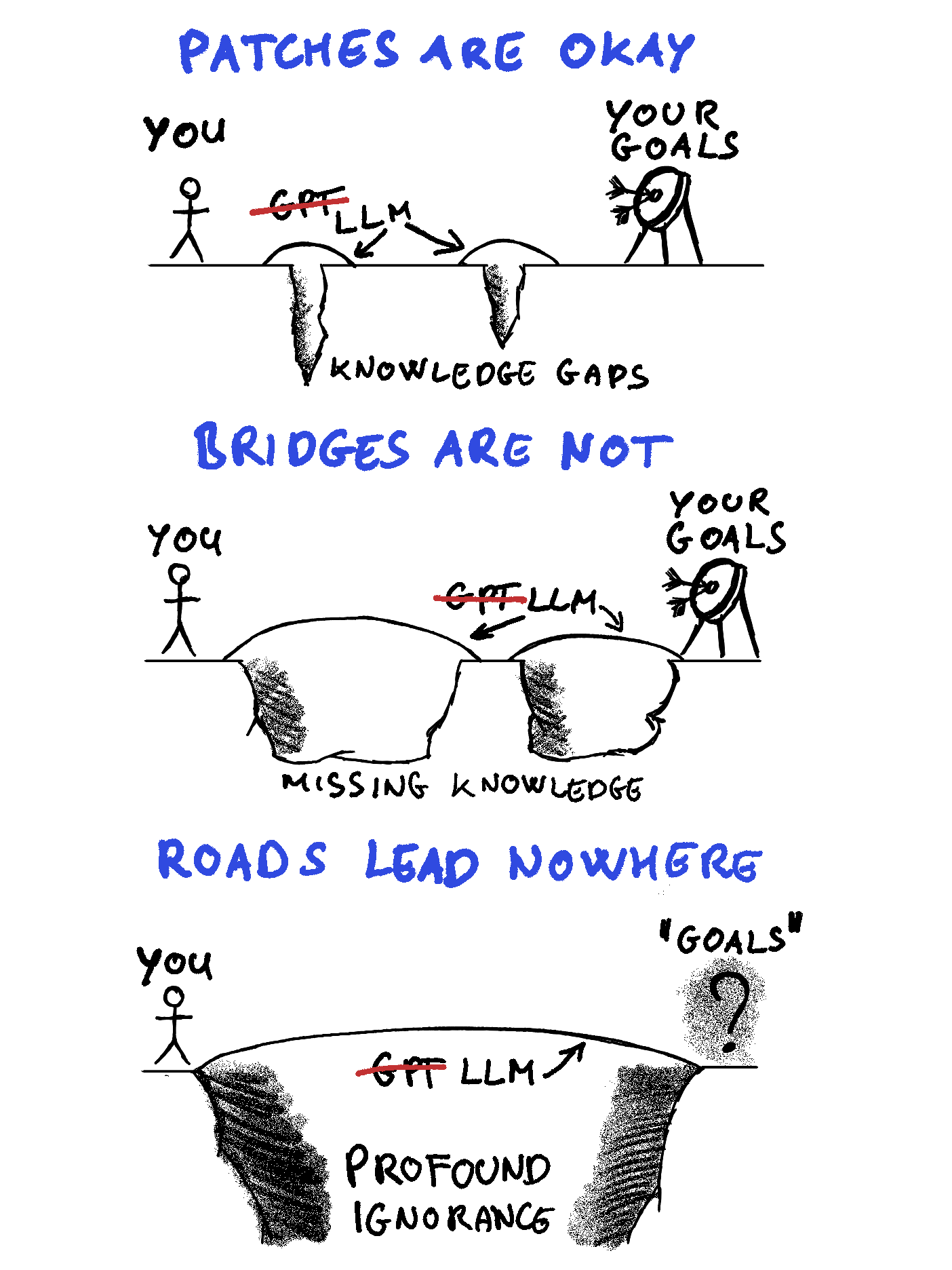

The infographic illustrates three ways of leaning on an LLM:

- Patches are okay: If you mostly know the material and only use the LLM to fill small gaps, the tool helps you move toward your goals.

- Bridges are not: If you depend on it to cover whole areas of knowledge you lack, you risk building on weak ground. The model gives an answer, but without your own understanding, you cannot judge whether it is correct.

- Roads lead nowhere: If you know nothing at all and expect the LLM to carry you, you will be misled. Without direction or context, the answers can seem useful but leave you lost.

Danger

The key point: LLMs are useful as assistants, not as substitutes for your own learning.

Writing Good Prompts

To get better results, think of your prompt in three layers:

- Persona: tell the model who it should act as (e.g., “You are an experienced database teacher”).

- Context: explain the situation (e.g., “I am a student trying to learn SQL basics”).

- Task: give a clear instruction (e.g., “Explain how to write a SELECT query with a WHERE clause”).

This structure makes your request concrete and guides the model to stay on track.
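As a minimal sketch, the three layers can be assembled into a single prompt string. The function name `build_prompt` and the one-layer-per-line layout are assumptions made for this example; the text of each layer is taken from the list above.

```python
def build_prompt(persona: str, context: str, task: str) -> str:
    """Join the three layers into one prompt, one layer per line."""
    return "\n".join([persona.strip(), context.strip(), task.strip()])


prompt = build_prompt(
    persona="You are an experienced database teacher.",
    context="I am a student trying to learn SQL basics.",
    task="Explain how to write a SELECT query with a WHERE clause.",
)
print(prompt)
```

Keeping the layers as separate arguments makes it easy to change one (for example, the task) while reusing the others.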

Prompt template fields explained

- Persona – define who the model should act as (e.g. expert, teacher, reviewer).

- Current status of affairs – give background or context so the model knows the situation.

- Input for the current task – the actual material you want the model to work on (text, data, question).

- Output for the current task – describe the desired result (summary, list, code, essay).

- Examples – optional demonstrations of the format or style you expect (few-shot prompting).

- Chain of thought – hints to encourage step-by-step reasoning (“think step by step”, “list assumptions first”).

- Restrictions – limits such as word count, format, scope, or things to avoid.

- Emotional bias – tone and style (neutral, motivational, critical, humorous, etc.).
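One possible way to keep these fields organized is a small data class that renders only the fields you filled in. This is a sketch under assumptions: the class name `PromptTemplate`, the attribute names, the section labels, and the sample values are all invented for illustration and are not part of any library.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptTemplate:
    # Required fields
    persona: str
    status: str       # "current status of affairs"
    task_input: str   # material the model should work on
    task_output: str  # description of the desired result
    # Optional fields; empty values are left out of the prompt
    examples: List[str] = field(default_factory=list)
    reasoning_hint: str = ""
    restrictions: str = ""
    tone: str = ""

    def render(self) -> str:
        """Build the prompt text, skipping fields that were left empty."""
        sections = [
            ("Persona", self.persona),
            ("Current status", self.status),
            ("Input", self.task_input),
            ("Expected output", self.task_output),
            ("Examples", "\n".join(self.examples)),
            ("Reasoning", self.reasoning_hint),
            ("Restrictions", self.restrictions),
            ("Tone", self.tone),
        ]
        return "\n\n".join(
            f"{label}:\n{text}" for label, text in sections if text
        )


template = PromptTemplate(
    persona="You are a careful code reviewer.",
    status="I am a student who has just written a first SQL query.",
    task_input="SELECT name FROM students WHERE grade > 3",
    task_output="A short list of possible problems.",
    restrictions="At most five bullet points.",
)
print(template.render())
```

Because empty fields are skipped, the same template works for a short request and for a fully specified one.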

Chain of Thought

When people solve problems, they often think step by step. LLMs can mimic this if you ask them to “explain your reasoning step by step.” This is called a chain of thought. It often improves accuracy on reasoning tasks, but remember that the model is still predicting likely text, not truly reasoning.
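In practice, a chain-of-thought request is just extra text in the prompt. The helper below is a minimal sketch; the name `with_step_by_step`, the default hint wording, and the sample question are assumptions chosen for illustration.

```python
DEFAULT_HINT = "Explain your reasoning step by step, then state the final answer."


def with_step_by_step(prompt: str, hint: str = DEFAULT_HINT) -> str:
    """Append a step-by-step reasoning hint to an existing prompt."""
    return f"{prompt.rstrip()}\n\n{hint}"


question = "A train leaves at 14:20 and arrives at 16:05. How long is the trip?"
print(with_step_by_step(question))
```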

Zero-Shot and Few-Shot Learning

Large language models (LLMs) like GPT are trained on enormous amounts of text, but they are not trained directly for every possible question you might ask. Instead, they learn general patterns of how language works. Because of this, they can often handle completely new tasks — but how you ask the question makes a big difference.

Zero-shot learning means asking the model to do something without giving it any examples. You simply describe the task in words and expect the model to figure it out.

Example

Task: “Translate this text into French: I like apples.”

Answer: “J’aime les pommes.”

The model was never specifically taught your exact sentence, but because it has seen enough patterns of English–French text, it can generate the correct translation. Zero-shot is quick and simple, but it may produce mistakes if the task is complicated or unusual.

Few-shot learning means you first give the model a few examples of how the task should be done, and then you ask it to continue with a new case. By showing examples, you “teach” the model the pattern you expect right inside the prompt.

Example

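A few-shot prompt for this task lists several English words, each followed by its French translation, and ends with a new English word for the model to translate.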

Because you gave several examples, the model understood the pattern: take an English word and output the French translation. This usually improves results, since the model doesn’t have to guess the format — it just continues the style you showed.
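The difference between the two styles can be sketched in a few lines of code. The function name `build_few_shot_prompt`, the `English:` / `French:` labels, and the word pairs are assumptions chosen for illustration; any consistent format would serve the same purpose.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Build a prompt from an instruction, example pairs, and a new query.

    With an empty `examples` list the result is a zero-shot prompt.
    """
    lines = [instruction]
    for source, target in examples:
        lines.append(f"English: {source}")
        lines.append(f"French: {target}")
    # End with the new case and an open slot for the model to fill in.
    lines.append(f"English: {query}")
    lines.append("French:")
    return "\n".join(lines)


examples = [("apple", "pomme"), ("house", "maison"), ("book", "livre")]

few_shot = build_few_shot_prompt("Translate English to French.", examples, "dog")
zero_shot = build_few_shot_prompt("Translate English to French.", [], "dog")

print(few_shot)
print("---")
print(zero_shot)
```

Ending the prompt with an unfinished `French:` line invites the model to continue the pattern set by the examples rather than invent its own format.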

Tip

As a student, think of zero-shot and few-shot like asking a friend for help:

- Zero-shot is saying “Can you translate this word?” without showing any examples.

- Few-shot is showing them how you want it written, then asking them to continue.

Few-shot prompting is especially powerful when you want consistency (same style, same structure, same format) across many answers. Even just two or three examples can make the output much more reliable.

Language Limitations

LLMs are generally better in English than in less widely used languages. The reason is simple: English dominates the training data, so the model has seen far more examples. For less common languages, the training data is sparser, so the answers may be less reliable or less fluent.

The Balance

Quote

An LLM is like a calculator for words. It speeds up routine work and helps fill gaps, but it cannot replace understanding, judgment, or critical thinking.

Use GPT as a tool:

- To brainstorm, rephrase, summarize, or generate drafts.

- To check your own knowledge or spark new ideas.

- To save time on repetitive tasks.

Do not use it:

- As your only source of truth.

- As a replacement for learning.

- As a shortcut to understanding complex ideas without effort.

With practice, you will learn when it is a patch to help you move forward — and when it risks becoming a bridge or a road to nowhere.